Navigating the Local Maze: A Deep Dive into Local Generative AI Results

As a marketer, you’re likely keeping a pulse on the latest artificial intelligence (AI) developments. One of the most important ways AI is impacting marketing is through local search. Today, several generative AI (genAI) tools produce output based on a user’s questions, including answers to local queries.

So, what happens when someone uses genAI for a local search? What if someone searches for “Mexican food near me” or “Starbucks near me”? As genAI becomes more popular every day, is your brand optimized for those types of searches?

Keeping track of what’s happening on all the different AI-powered platforms can get confusing. That’s where this blog comes in!

We conducted research across six top genAI tools and analyzed what search results look like for a range of local searches.

We’ll break down the top findings from our research, differences across technology, and what your brand can do to stay on top of the latest developments.

Introducing the Methodology and Research

Before diving into the data, let’s break down which genAI tools we used and also reveal the local search queries we used across each platform.

The six genAI tools used for this study include:

- Google Search Generative Experience (SGE)

- Google Gemini

- ChatGPT

- Bing Copilot

- Perplexity

- Meta AI

We entered six different searches on each platform, and asked a follow-up question for each. The follow-up question aimed to understand if the genAI would give results based on the first query, or if it would generate completely different results. GenAI is supposed to help answer very specific questions and support complex search journeys, so we put it to the test.

The six original queries, which all include “near me,” were:

- Mexican restaurant near me

- Grocery store near me

- Sporting goods near me

- Veterinarian near me

- Hotel near me

- Starbucks near me

An example of the follow-up question we asked for a “Mexican restaurant near me” was, “Which of these restaurants are open latest on Sundays?”

For each of the genAI results, we tracked:

- The number of listings

- The details and accuracy provided in the listing

- The cited sources of information

- If the listings matched what appeared in the Google 3-Pack
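The last check can be pictured as a simple name comparison. The sketch below is only an illustration, not the process used in the study: the function names, the normalization rule, and the business names are all hypothetical.

```python
def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so minor formatting differences don't block a match."""
    return " ".join(name.lower().split())


def three_pack_overlap(genai_listings: list[str], three_pack: list[str]) -> list[str]:
    """Return the genAI listings whose normalized names also appear in the 3-Pack."""
    pack_names = {normalize(name) for name in three_pack}
    return [name for name in genai_listings if normalize(name) in pack_names]


# Hypothetical business names, not data from the study.
genai_results = ["Example Taqueria", "SAMPLE CANTINA", "Demo Grill"]
google_three_pack = ["sample cantina", "Example Taqueria", "Other Place"]

matches = three_pack_overlap(genai_results, google_three_pack)
print(f"{len(matches)} of {len(google_three_pack)} 3-Pack listings also appeared in the genAI answer")
```

A real comparison would likely need fuzzier matching (for example, on address or phone number), since the same business can be named slightly differently across platforms.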

We scored each tool’s effectiveness in delivering helpful local results using a 100-point scale.

Our approach involved tracking and measuring whether:

- The genAI could answer local search queries of the types noted above (20 max points)

- Results were relevant to the query intent and reasonably local to the searcher (10 max points)

- Results linked clearly to reliable sources (10 max points)

- Results contained helpful information (10 max points)

- Results contained helpful photos (10 max points)

- Results contained a map (10 max points)

- Results allowed drill down / refinement of results (10 max points)

- Results remained consistent and relevant when asking follow-up questions (20 max points)

Using these criteria, we scored each platform on its ability to deliver quality local search results.
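For readers who want to reuse the rubric, here is a minimal sketch of how the 100-point scale adds up. The point caps come from the list above; the criterion keys, the `total_score` helper, and the example scores are hypothetical and do not reflect any tool's actual results.

```python
# Maximum points per criterion, taken from the rubric above (the caps sum to 100).
RUBRIC = {
    "answers_local_queries": 20,
    "relevant_and_local": 10,
    "reliable_sources": 10,
    "helpful_information": 10,
    "helpful_photos": 10,
    "includes_map": 10,
    "allows_refinement": 10,
    "consistent_follow_ups": 20,
}


def total_score(awarded: dict[str, int]) -> int:
    """Sum awarded points, rejecting unknown criteria and out-of-range values."""
    total = 0
    for criterion, points in awarded.items():
        if criterion not in RUBRIC:
            raise KeyError(f"unknown criterion: {criterion}")
        if not 0 <= points <= RUBRIC[criterion]:
            raise ValueError(f"{criterion} must be between 0 and {RUBRIC[criterion]}")
        total += points
    return total


# Hypothetical scores for an imaginary tool; criteria left out count as zero.
example = {
    "answers_local_queries": 20,
    "relevant_and_local": 8,
    "includes_map": 0,
    "consistent_follow_ups": 15,
}
print(total_score(example))  # 43
```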

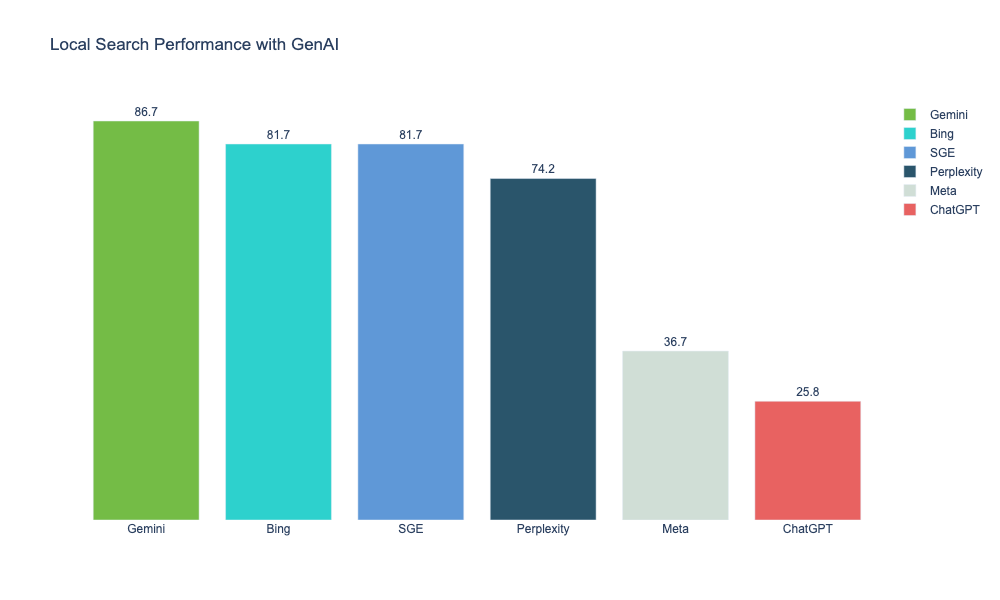

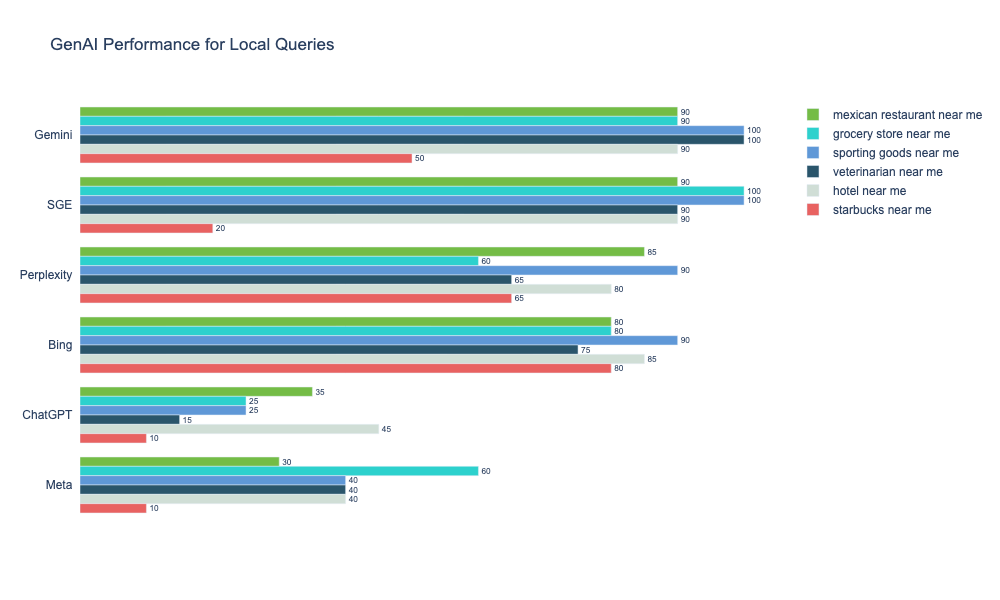

As you can see, Gemini was ranked first in our analysis, with ChatGPT-4o coming in last. Below is a breakdown of how each genAI tool performs by query.

How GenAI Tools Answer Local Queries

Let’s break down how these generative AI tools work (without getting too technical). AI chatbots rely on large language models (LLMs) that recognize questions and generate responses after ingesting large amounts of data. The more data these tools have access to, the better they can understand natural language patterns and produce human-like responses.

Today, many LLMs make use of a capability called retrieval-augmented generation (RAG), which augments their output. An LLM on its own relies on the knowledge it absorbed during training, whereas a RAG system searches external sources, such as parts of the internet, to supplement the LLM.

In simple terms, genAI tools know how to connect to the internet (and other sources) in order to provide targeted answers to specific requests.
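To make the idea concrete, here is a toy sketch of the retrieve-then-generate pattern. It is not how any of the tools in this study are built: the `Listing` class, the in-memory `LISTINGS` data, and both helper functions are hypothetical stand-ins, and the final call to an LLM is left out.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    category: str
    city: str
    hours: str


# Hypothetical listings standing in for an external source such as a maps or reviews platform.
LISTINGS = [
    Listing("Example Taqueria", "mexican restaurant", "Springfield", "11am-10pm"),
    Listing("Sample Cantina", "mexican restaurant", "Springfield", "12pm-11pm"),
    Listing("Demo Grocery", "grocery store", "Springfield", "7am-9pm"),
    Listing("Placeholder Tacos", "mexican restaurant", "Shelbyville", "10am-9pm"),
]


def retrieve(category: str, city: str, limit: int = 3) -> list[Listing]:
    """Retrieval step: pull matching records from the external source."""
    matches = [item for item in LISTINGS if item.category == category and item.city == city]
    return matches[:limit]


def build_prompt(question: str, listings: list[Listing]) -> str:
    """Augmentation step: place the retrieved facts in front of the user's question."""
    facts = "\n".join(f"- {item.name} ({item.city}), open {item.hours}" for item in listings)
    return f"Answer using only these listings:\n{facts}\n\nQuestion: {question}"


results = retrieve("mexican restaurant", "Springfield")
prompt = build_prompt("Mexican restaurant near me", results)
print(prompt)  # In a real system, this text would be sent to the LLM for the generation step.
```

The takeaway for brands is that the answer can only be as complete as the listings the retrieval step finds.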

For instance, Gemini can access Google Maps, and Copilot can access Yelp. But why not give LLMs and genAI tools access to the entire internet?

Providing access to the entire internet would leave more room for incorrect information and reduce the accuracy and reliability of genAI. By limiting access to certain parts of the internet, genAI tools can make their replies more reliable and trustworthy.

Digging Into the Results

Now that you understand how genAI works, let’s briefly dive into what we found for each of the genAI tools and highlight some of the most important findings! We’ll go in order of how the tools fared.

1. Gemini

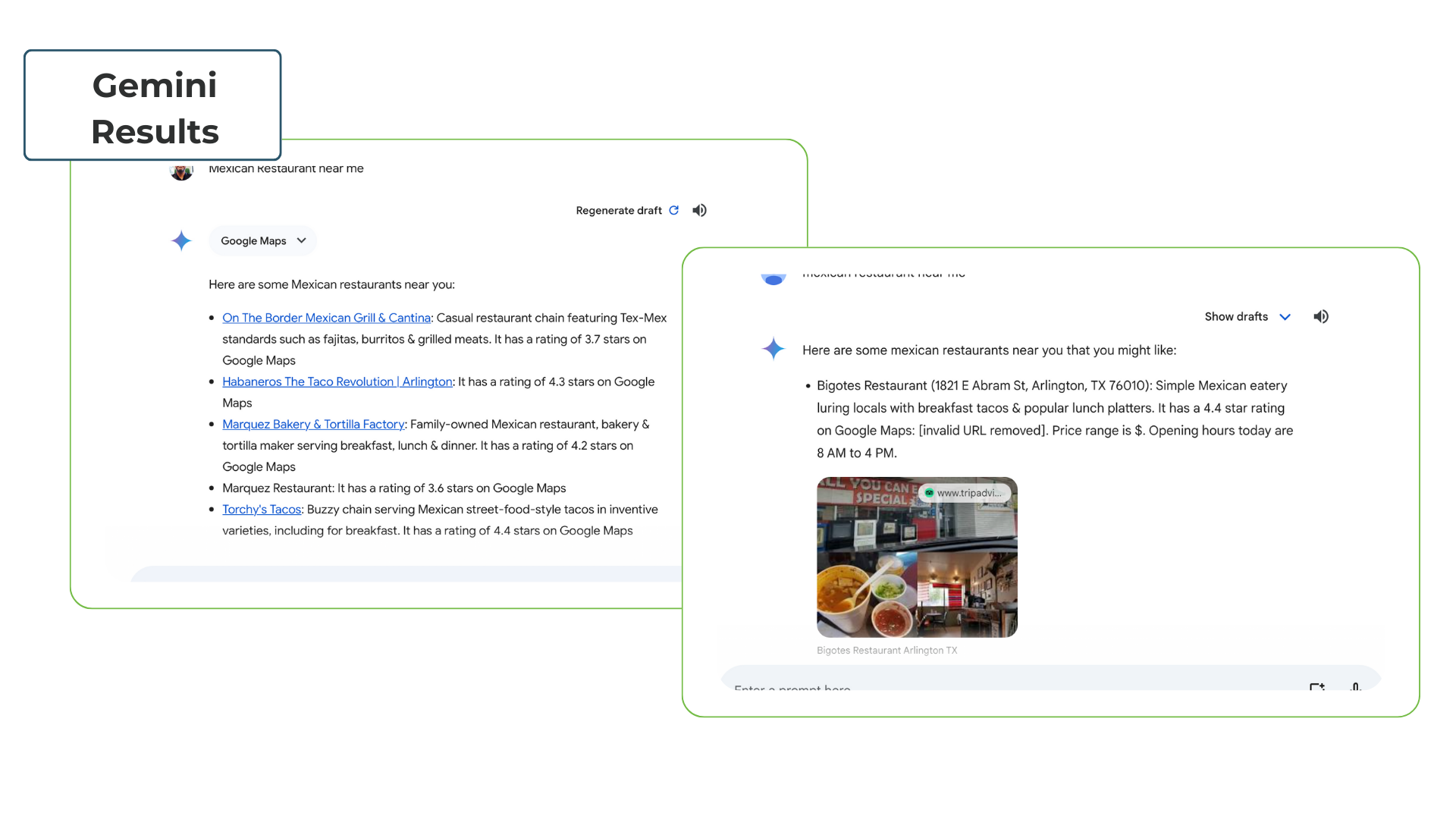

Google Gemini came in first, slightly ahead of Google SGE and Bing Copilot. It’s worth noting that Gemini provided two different output types. One version contained bullet points with short descriptions and links, with a map below. The other version contained bullet points with photos under each entry.

There didn’t seem to be any consistent reason why you’d get one versus the other, but for the most part, they contained the same information. In both versions, Google Maps was the main source of local information, which is an example of RAG in action.

Gemini’s main differentiator was that it could answer all local search queries with relevant information. Its search results also included a lot of helpful information, such as photos, maps, business details, and reliable links.

Additionally, in five of the six searches, Gemini answered our follow-up questions helpfully, remembering prior responses and helping the user refine each query. If it couldn’t find an answer that matched the question, it provided instructions on figuring it out.

For instance, a follow-up for “Grocery store near me” was “Which of these grocery stores has kosher offerings?” In this case, Gemini answered, “It’s difficult to say which of these stores has kosher offerings without directly looking at their inventory.

While some larger grocery stores may carry a limited selection of kosher items, it’s less likely a gas station convenience store (All-Star Grocery) or a general supermarket (POCO LOCO SUPERMERCADO) would have a dedicated kosher section. Hula Hu Market might be a possibility, but again, it’s not guaranteed.”

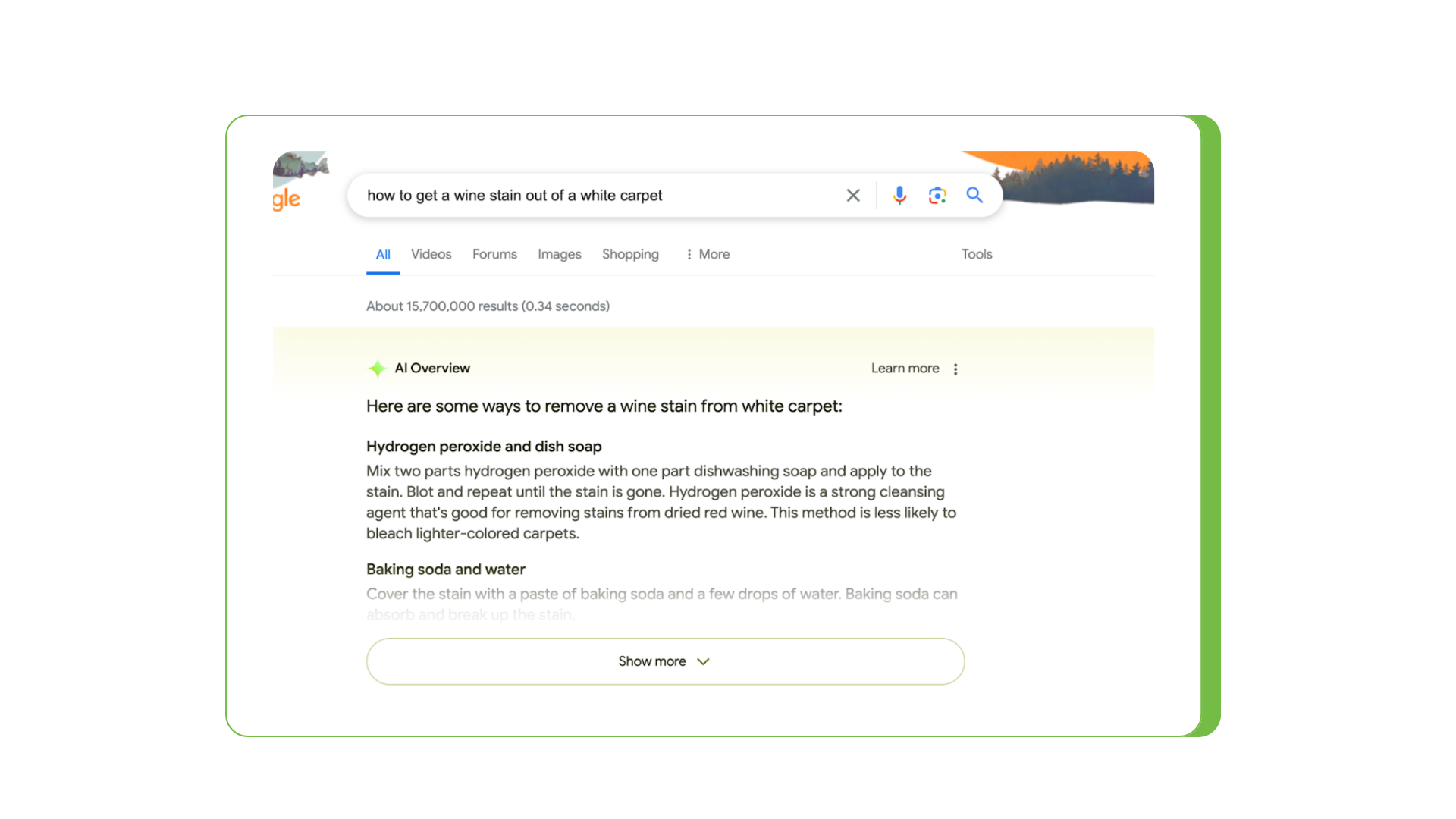

2. SGE (Now AI Overviews)

SGE, another genAI tool from Google, came in second in a tie with Bing Copilot. As with Gemini, the primary source for local information in SGE was Google itself.

Most SGE results were very detailed and included:

- Star ratings

- A map

- General location (ex: street name)

- Photos

- A short description

- “Best” tags

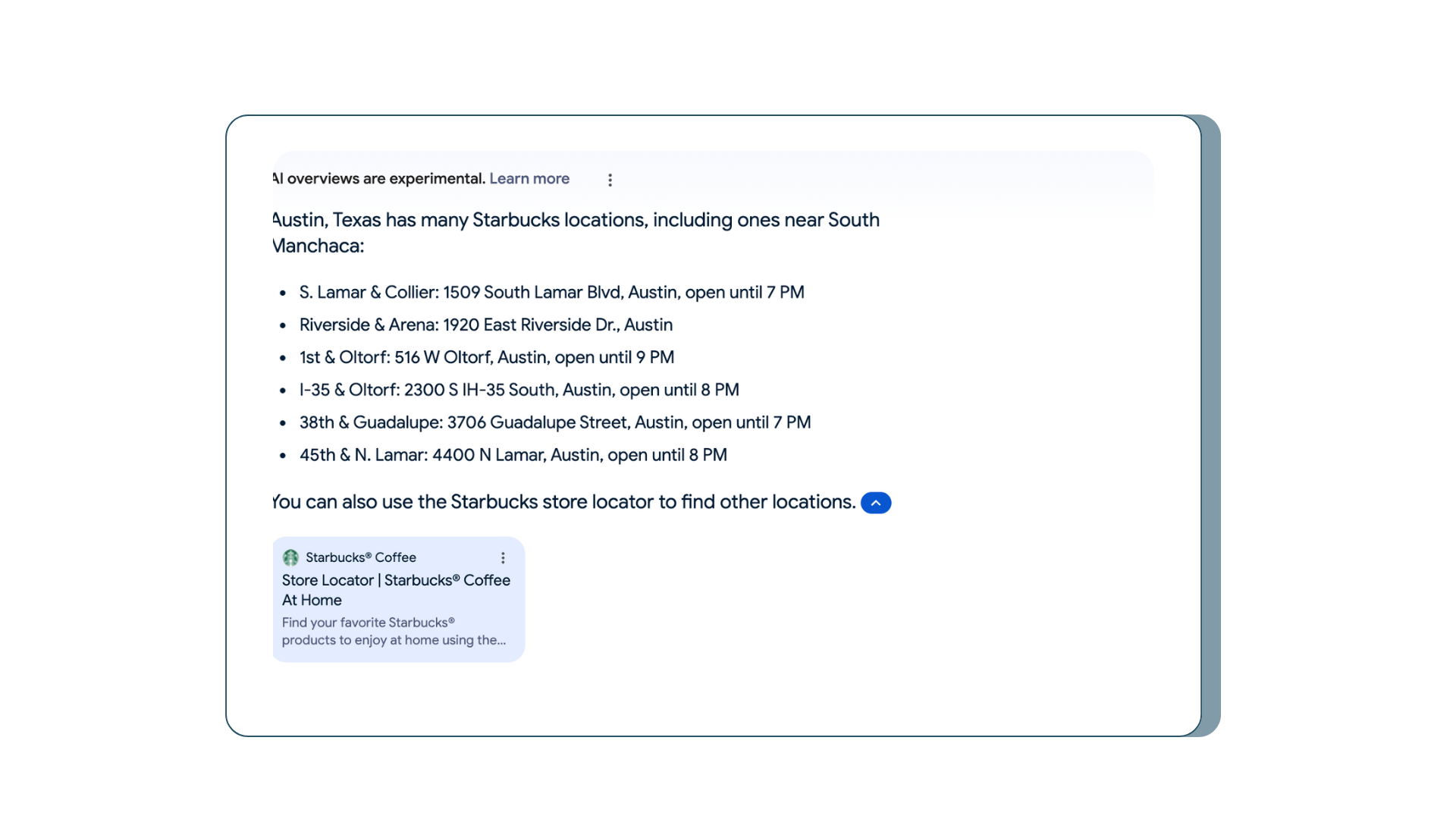

SGE also did an excellent job of answering follow-up questions and seems to have improved considerably on this score since its launch in 2023. The main search that cost SGE points was our branded local “Starbucks near me” search.

This search provided a list of Starbucks locations in the city in which the search was conducted, but not necessarily the closest ones. The results contained no map or images, as seen below.

Last week, at its annual I/O event, Google announced that SGE is being rebranded as “AI Overviews” and rolled it out to everyone in the U.S. aged 13 or older. Google plans to bring AI Overviews to more countries soon. Right now, AI Overviews appear for some searches and can’t be turned off. More research is needed on how often AI Overviews appear and for which types of queries.

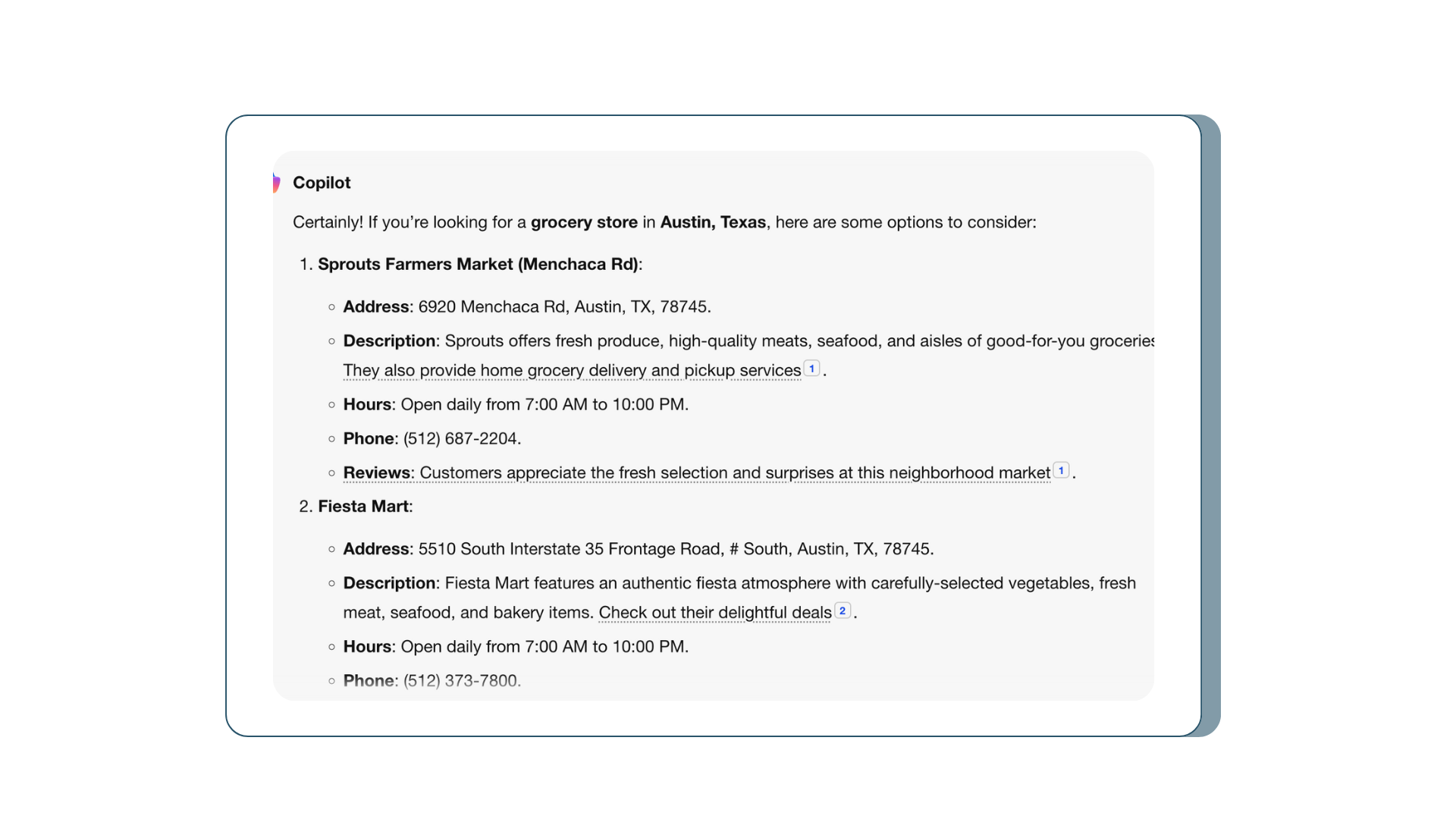

3. Bing Copilot

As for Bing Copilot, the results were consistent, but there are a few reasons it tied for second place. Below, you’ll find an image of what a search result on Bing looks like.

As you can see, Bing presented the results as a list, and a map with more Google-like listings appeared at the bottom of the search results.

Here are some of the high-level findings from our Bing searches:

- The first set of results was very detailed and included:

  - An address

  - Descriptions

  - A phone number

  - Hours

  - Reviews

- Photos and maps appeared at the bottom of the SERP

- It typically did a good job of answering the follow-up questions, with a few exceptions

Notably, only a few of the search results on Bing matched those from Google. As a brand, it’s essential to assess how you appear in Copilot, since its results are as strong as SGE’s but rely on Bing’s search engine, which differs from Google’s in many details.

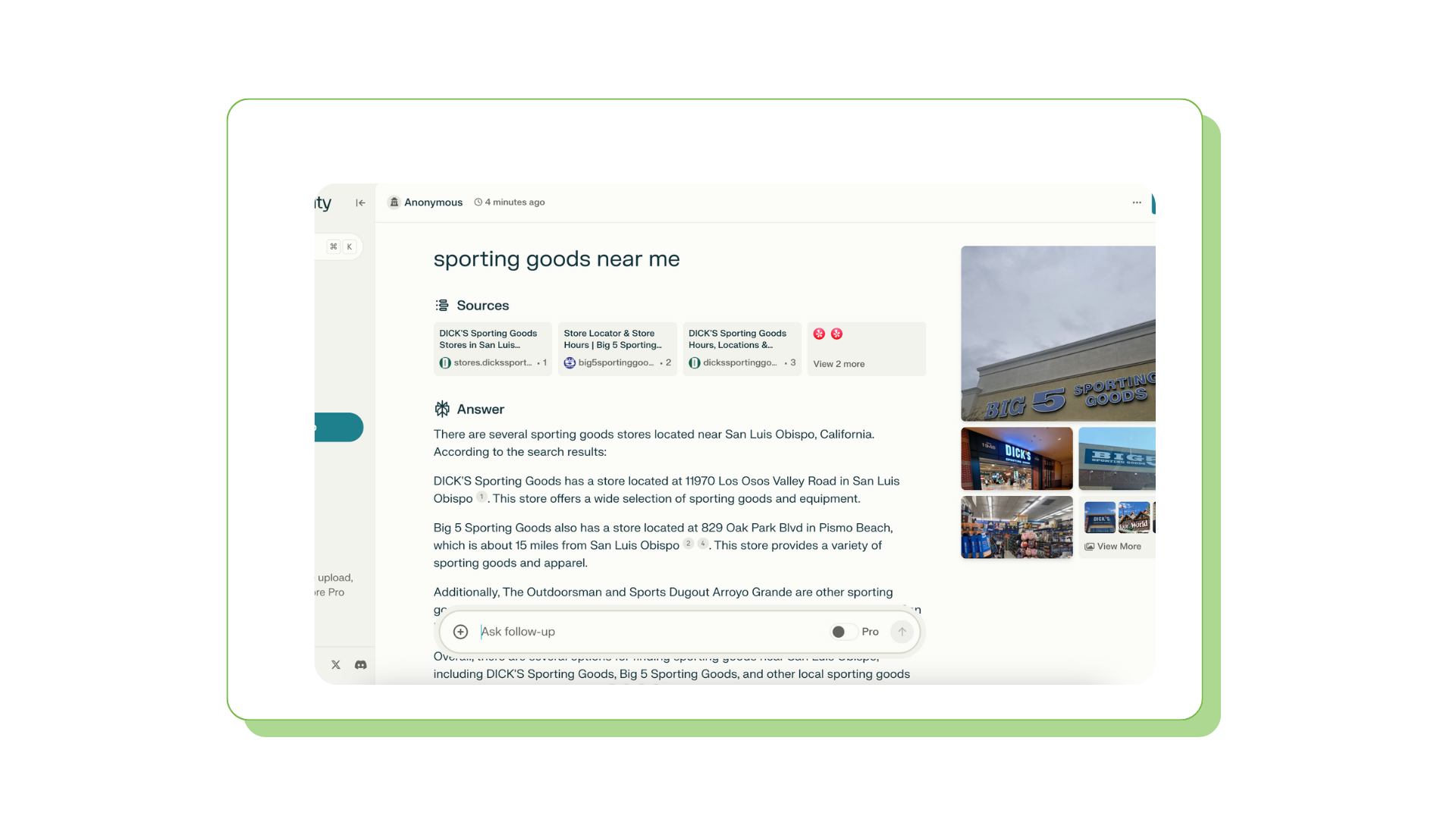

4. Perplexity

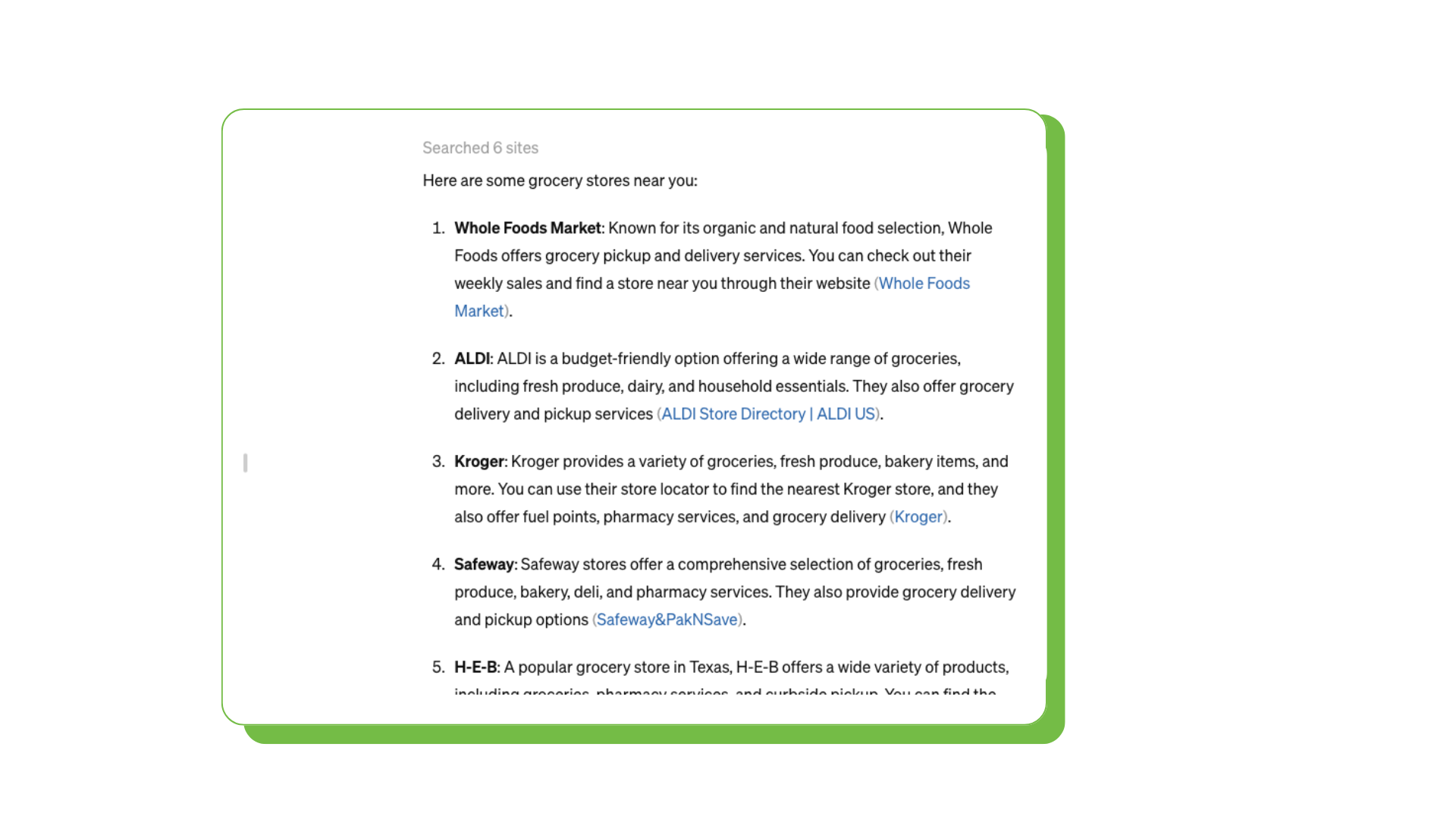

Perplexity, an upstart platform specializing in genAI for search, landed in the middle of the pack among our genAI tools. Except for “Hotel near me,” all of Perplexity’s results were sourced from Yelp. While Perplexity provided detailed and relevant information for our searches, the layout was different and a bit clunkier.

As you can see, Perplexity lists its information in paragraph form rather than using a listings format and has the sources at the top. If you read through the results, you’ll see that Perplexity includes the business name and address, along with a short description.

Perplexity included a map and images of the locations for most of the results. However, the pictures aren’t tied to specific listings and are all grouped together. Because Perplexity relied almost entirely on Yelp as its source and grouped its pictures together, we gave it a lower rating than a few of the other genAI tools.

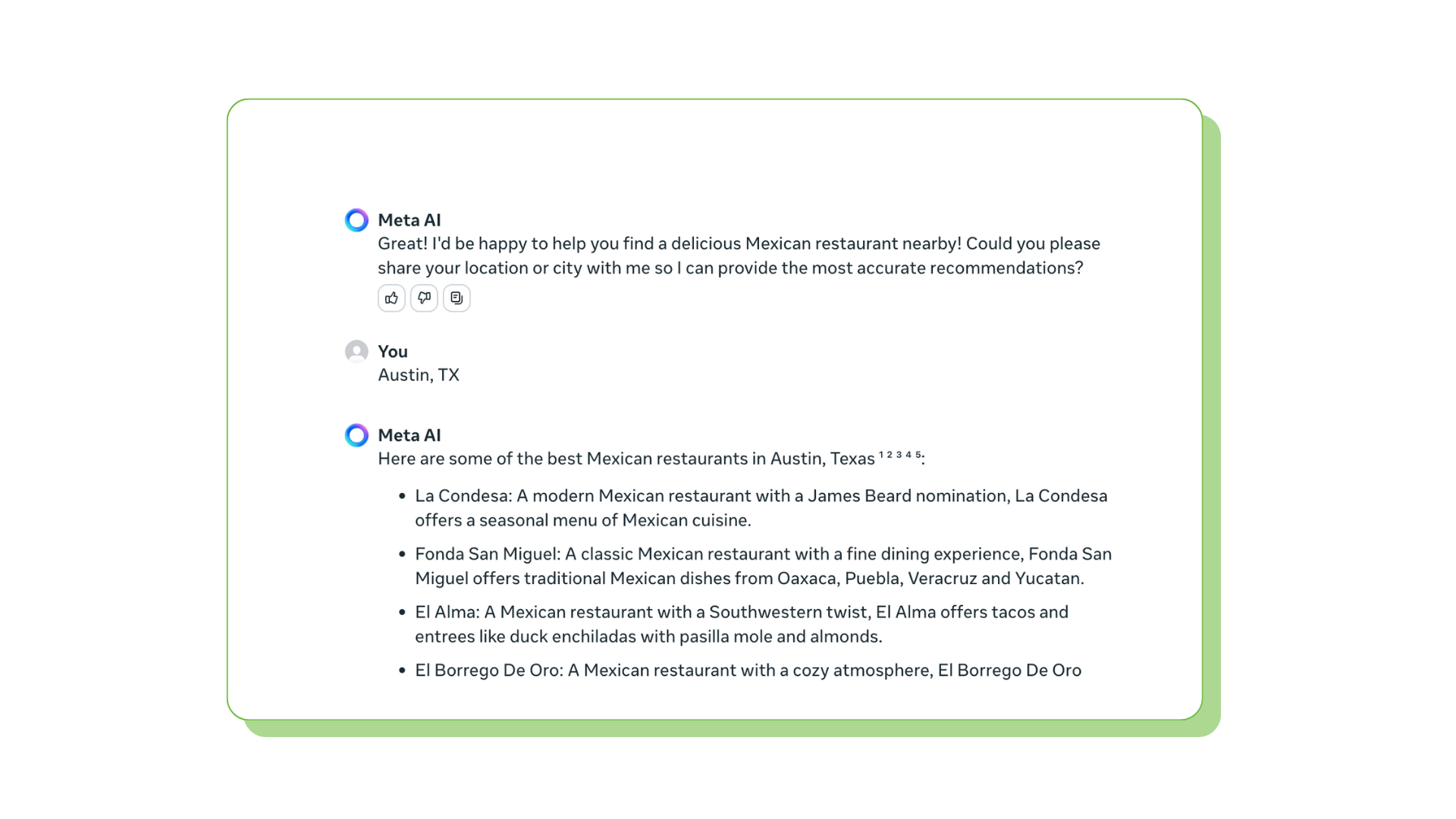

5. Meta

On April 18, Meta announced that it upgraded its chatbot with its newest large language model, Llama 3, while also saying Meta AI would be added to the search bar of Facebook, Instagram, Messenger, and WhatsApp. In addition, users can access Meta AI on a standalone site. With this iteration of Meta AI being so new, it’s no surprise that the platform scored much lower than the other tools we studied.

Meta AI gives you the option to log into a Facebook account. For this research, our team didn’t log in. When conducting the local searches, Meta AI did not know our location and had to ask for it. Additionally, the information given in the local search results was minimal.

For most of the queries, Meta only included the names of the businesses and a short description and left out any address, contact information, or ratings and reviews, as seen in the image below.

Additionally, none of Meta’s results matched the Google 3-Pack or Bing’s genAI results, and it was very inconsistent in its ability to answer follow-up questions. For instance, when asked about a grocery store with kosher offerings, it listed several stores with such offerings.

On the other hand, when asked which Starbucks locations have a drive-through, Meta recommended we check out the Starbucks website. Because this version of Meta AI is so new, we expect the output of results to develop and evolve. For now though, it earned a lower rating.

The bigger picture here is that, across the board, genAI tools rank listings differently from the more familiar Google 3-Pack and local results. These differences are likely due to LLMs interpreting queries differently than more traditional algorithms, though the reasons are not yet entirely clear and may not be as easy to catalog as search ranking factors.

It’s clear, however, that LLMs are looking for detailed, often highly specific answers and need rich data in order to provide them. Brands that supply a full and complete range of information on local platforms will be more likely to be found across the broader range of queries LLMs support.

6. ChatGPT

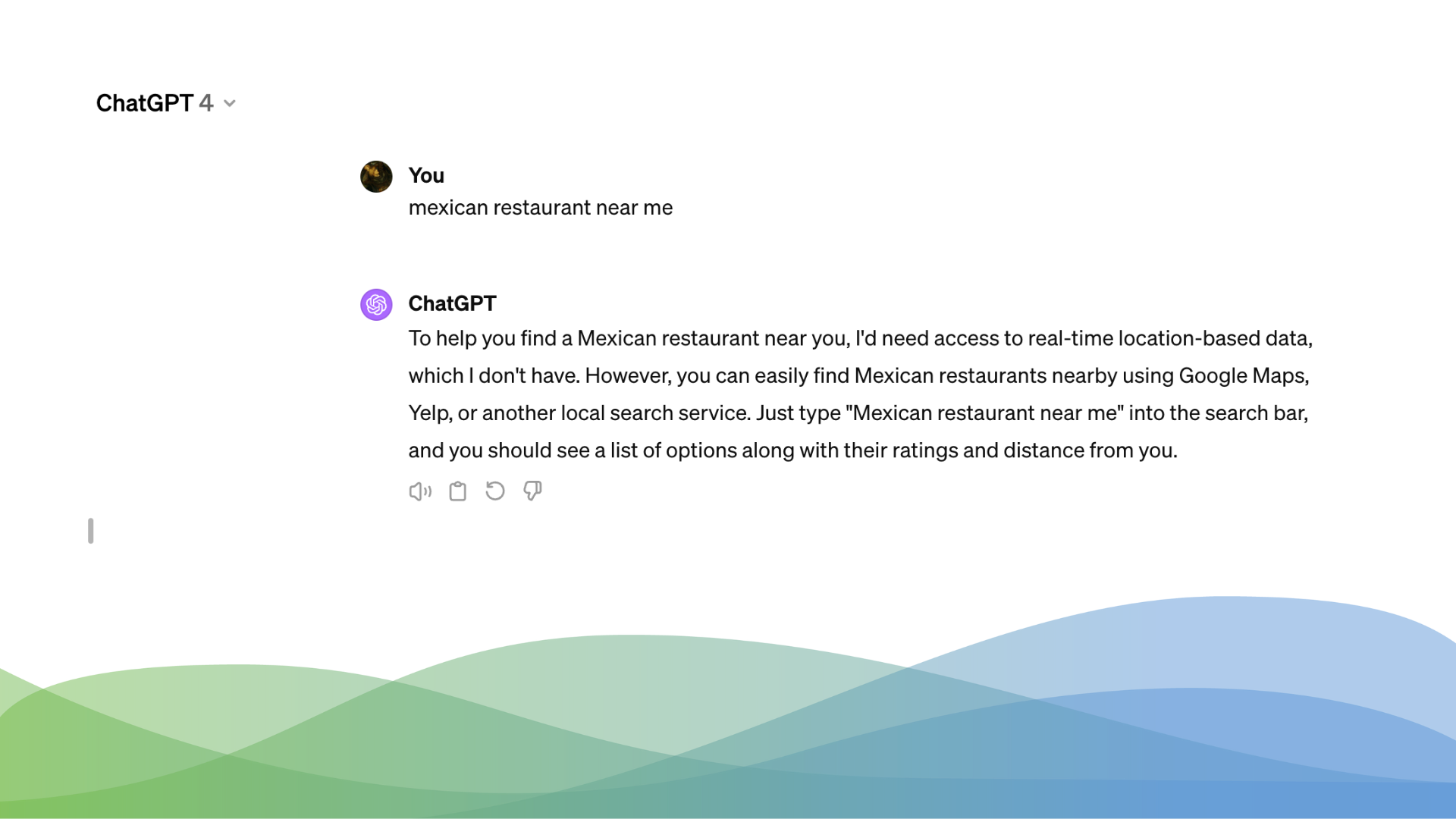

OpenAI’s ChatGPT came in last. When we first conducted this research, ChatGPT 3.5, OpenAI’s free version, couldn’t provide search results for the “near me” queries we tested. When using ChatGPT 3.5, we got messages like the one below.

However, we received mixed results when testing OpenAI’s newly released GPT-4o with ChatGPT. The chatbot could answer our search queries but didn’t know our precise location. So, despite living in Arroyo Grande, California, we received recommendations for grocery stores nationwide, including H-E-B in Texas.

This isn’t to say that ChatGPT can’t help with local searches at all, but unlike all other chatbots in our study, it’s unable to support the common “near me” searches we used.

It’s also worth pointing out that ChatGPT’s sources came primarily from store locators and brand websites. The only other sources listed were Tripadvisor for our restaurant-focused search and Hotels.com for our hotel search.

It’s worth continuing to monitor how different versions of ChatGPT manage local searches and how it advances.

What These Results Mean for Your Brand

Now that you have all of this information, what’s next? As mentioned, it’s easy to feel like you’ve lost track of what’s happening on genAI platforms. SOCi is here to keep you informed!

For instance, our weekly Local Memo blogs break down the latest news in local search and social, often covering genAI technology updates.

Not only that, but we have over a decade of experience with local SEO and have incorporated AI into our CoMarketing Cloud to make managing your local businesses a breeze. With SOCi Genius, your brand can improve its online local marketing effort at scale, including its online visibility. Ensuring your local presence is optimized will increase the likelihood of appearing in these genAI local searches.

Ready to take the next step? Request a personalized demo today!